Augmented Intelligence Summit

/in Past Events /by Kirsten Gronlund

Steering the Future of AI

March 28 – 31, 2019 | 1440 Multiversity | Tuition $495

Today many of the concepts, consequences, and possibilities involved in a future with advanced AI feel distant, uncertain, and abstract. No one has all the answers about how to ensure that powerful AI in the future is beneficial, either in terms of technical implementation or in terms of transference to the domains of law, regulations and policy, industry best practices, or society at large. There are a number of organizations and initiatives that are working on the issues of AI safety, ethics, and governance.

Joining these efforts with a distinct role that bridges academia and industry, the Augmented Intelligence Summit offers a unique, inter-disciplinary approach to learning and creating solutions in this space. We ask: is it possible to develop a collective, concrete, realistic vision of a positive AI future that can inform policy, the development of the industry, and academic research, and can it be done inclusively? Our hypothesis is that this is indeed possible, and we have designed an experiment to test it.

The Summit will provide the tools and framework for a multi-disciplinary group of policy, research, and business leaders to imagine and interact with a simulated model of a positive future for our global society and its major interconnected systems – the economy, education, work, and political structures – through the lens of advanced AI. AIS utilizes a range of collaborative design tools and immersive exercises, including:

- Scenario simulation, war-game style

- World building and collaborative strategic planning exercises

- Presentations on models of economic, social and complex systems to inform our work

The Summit’s high-level goals are to increase systems literacy and improve long-term thinking and decision making, which we see as critical skills for navigating the future of advanced AI and its impact on society. In addition to presentations on the state of AI and its trajectory, immersive exercises at the Summit relate to a fictional future world with advanced AI that is both plausible and aspirational. Mentally committing to a specific version of the future can enhance our creativity, our ability to identify important variables, and our overall problem-solving capacity, helping us better imagine the societal challenges, opportunities, and needs that will face us and the planning that must be done.

Featured Speakers

Bios for the speakers can be found here.

Agenda

Thursday, March 28th

5:30 pm – 9:00 pm

Opening Keynote: Modeling, Simulation & Changing the Rules of the Game | Gaia Dempsey

Gaia Dempsey is an entrepreneur and pioneer in the field of augmented reality (AR). She is the founder and CEO of 7th Future, a consultancy that partners and co-invests with technology leaders and communities to build and launch global-impact innovation models.

World Building Breakout: “A Day in the Life” Exercise

Small groups imagine a day in the life of a character they co-create that lives on a plausible, aspirational version of Earth in 2045, which we’ve named Earth 2045. The goal of the exercise is to encourage participants to place themselves within the experience of Earth 2045, get familiar with the practice of creating a fictional future, and get to know each other (break the ice).

Friday, March 29th

9:00 am – 9:00 pm

Keynote: AI Futures — Where Are We Headed and How Do We Steer? | Stuart Russell

Stuart will frame the problem we are working to solve at AIS, namely that advanced AI technology represents a vast amplifying capacity for human beings that could veer into dangerous, uncharted territory with far-reaching societal implications.

Stuart is a renowned professor and former chair of the electrical engineering and computer sciences department at the University of California, Berkeley. At UC Berkeley, he holds the Smith-Zadeh Chair in engineering and is the director of the Center for Human-Compatible AI.

What’s the Future of Healthcare? | David Haussler

David will present a vision of what a plausible, aspirational healthcare system could look like by 2045.

David Haussler serves as the Distinguished Professor of Biomolecular Engineering at the University of California, Santa Cruz, where he directs the Center for Biomolecular Science and Engineering and the California Institute for Quantitative Biosciences (QB3). He is also the scientific co-director for the California Institute for Quantitative Biomedical Research.

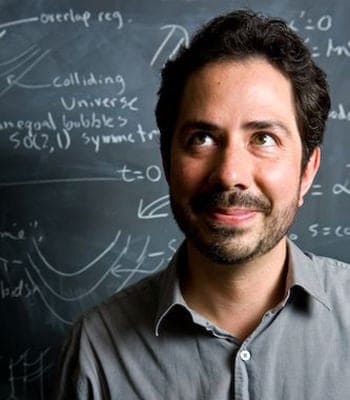

Introduction to World Building | Anthony Aguirre

Anthony Aguirre is a theoretical cosmologist and a Harvard-trained professor of physics at the University of California, Santa Cruz. He cofounded and is the associate scientific director of the nonprofit organization Foundational Questions Institute and is the cofounder of the Future of Life Institute.

World Building Breakout: Designing Interconnected Systems | Ariel Conn

Small groups collaborate on describing the systems that would need to be in place on Earth 2045 to move toward their aspirational vision. Each group focuses on a particular lens, such as neuroethics, democratic processes, economic justice, education, healthcare, manufacturing, etc.

Ariel Conn is the Director of Media and Outreach for the Future of Life Institute. Her work covers a range of fields, including artificial intelligence (AI) safety, AI policy, lethal autonomous weapons, nuclear weapons, biotechnology, and climate change.

What’s the Future of Criminal Justice? | Peter Eckersley

Peter is Director of Research at the Partnership on AI, a collaboration between the major technology companies, civil society and academia to ensure that AI is designed and used to benefit humanity. He leads PAI’s research on machine learning policy and ethics.

Synthesis Session

A facilitated large-group session in which participants share and combine the insights they developed in the system-building exercise and identify areas of confluence and conflict. The evolving description of Earth 2045 is enriched, including with a unified timeline of events.

War Game Simulation | Allison Duettmann & Lou Viquerat

The session begins with an “Intro to Existential Hope & Strengthening Civilization” talk from Allison. Next, Red Teams and Blue Teams are created to attack and defend Earth 2045 in multiple rounds.

Allison is a researcher and program coordinator at Foresight Institute, a non-profit institute for technologies of fundamental importance for the future of life. Her research focuses on the reduction of existential risks, especially from artificial general intelligence.

Lou is the Director of Development at Foresight Institute. Lou’s research focuses on alternative governance, justice, and economic systems for a more collaborative, sustainable, and global future society.

Saturday, March 30th

9:00 am – 5:30 pm

Keynote: Complex Adaptive System Modeling — Origins and Applications | David Krakauer

David will orient us toward solution paths for the problem Stuart outlined on Friday, namely the power of systems thinking for improving our understanding of the world, as well as our decision making, long-term thinking, and coordination capacity.

David is President and Professor of Complex Systems at the Santa Fe Institute. His research explores the evolution of intelligence on earth. This includes studying the evolution of genetic, neural, linguistic, social and cultural mechanisms supporting memory and information processing, and exploring their shared properties.

Keynote: The Value of Non-Zero Sum Dynamics | Michael Page

Michael focuses on the long-term social implications of the development and use of advanced artificial intelligence systems. He is a policy and ethics advisor at OpenAI, a nonprofit AI research company dedicated to charting a path to safe AI.

Keynote: TBD | Shahar Avin

Scenario Simulation | Shahar Avin

Groups of people on Earth 2045 pursue goals and report back on whether they succeeded (similar to a D&D quest).

Shahar is a postdoctoral researcher at the Centre for the Study of Existential Risk (CSER). He works with CSER researchers and others in the global catastrophic risk community to identify and design risk prevention strategies.

Keynote: Redefining Social Welfare: Bridging Preferences Across an Impossible Chasm | Marc Fleurbaey

Marc will provide an overview of contemporary economic theories of justice and introduce us to the most important and promising ideas for social progress identified by the International Panel on Social Progress on structural and systemic issues for the long-term future. Finally, Marc will explore new potential solutions that become viable in economies powered by advanced AI.

Marc is the Robert E. Kuenne Professor in Economics and Humanistic Studies, and a professor of Public Affairs and the University Center for Human Values at Princeton University.

Sunday, March 31st

9:00 am – 1:30 pm

Keynote: TBD | Max Tegmark

Max is a professor doing AI and physics research at MIT, and advocates for positive use of technology as president of the Future of Life Institute.

Global Ethics in AI Development | Marie-Therese Png

Marie-Therese is a doctoral candidate at the Oxford Internet Institute and a PhD research intern at DeepMind Ethics and Society. Her research focuses on Globally Beneficial AI, Intercultural AI Ethics, and Global Justice.

Organizers

Anthony Aguirre, PhD

Physicist | Cofounder of Future of Life Institute | Cofounder of Foundational Questions Institute

Anthony Aguirre is a theoretical cosmologist and a Harvard-trained professor of physics at the University of California, Santa Cruz. He cofounded and is the associate scientific director of the nonprofit organization Foundational Questions Institute and is the cofounder of the Future of Life Institute.

He received his doctorate in astronomy from Harvard University in 2000 and then spent three years as a member of the Institute for Advanced Study in Princeton, New Jersey, before accepting a professorship in the physics department of the University of California, Santa Cruz.

He has worked on a wide variety of topics in theoretical cosmology (the study of the formation, nature, and evolution of the universe), including the early universe and inflation, gravity physics, first stars, the intergalactic medium, galaxy formation, and black holes.

Gaia Dempsey

Founder & CEO of 7th Future

Gaia Dempsey is an entrepreneur and pioneer in the field of augmented reality (AR). She was a cofounder and former managing director at DAQRI, an augmented reality hardware and software company that delivers a complete professional AR platform to the industrial and enterprise market.

Gaia is currently the founder and CEO of 7th Future, a consultancy that partners and co-invests with technology leaders and communities to build and launch global-impact innovation models, with a commitment to openness, integrity, resilience, and long-term thinking.

William Dolphin

CEO of CueSquared

Dr. William Dolphin is an accomplished chief executive officer and senior executive with a history of leading the management and operations of international private and public companies in a range of industries including biotechnology, pharmaceutical, medical device, and information technology.

Currently, he is chief executive officer at CueSquared, which brings developments in artificial intelligence and machine learning to bear on healthcare applications—in particular, to expedite business processes, facilitate access to healthcare, and improve outcomes.

Dr. Dolphin brings a wealth of knowledge and an extensive history of building and growing successful, global businesses, especially to facilitate the delivery of healthcare and improved patient outcomes. He has served as chief executive officer of numerous medical and information technology companies around the world including Omedix, SonaMed Corporation, SpectraNet Ltd., Visiomed Group, and Avita Medical. Additionally, Dr. Dolphin has served as a member of the board of directors for over a dozen public and private companies worldwide.

Tasha McCauley

Board Director of GeoSim Systems

Tasha McCauley is a technology entrepreneur and robotics expert. She is board director at GeoSim Systems, a company centering on a new technology that produces high-resolution, fully interactive virtual models of cities. This novel approach to “reality capture” yields the most detailed VR environments ever created out of data gathered from the real world.

Tasha is also the cofounder of Fellow Robots, a robotics company based at NASA Research Park in Silicon Valley. Formerly on the faculty of Singularity University, she taught students about robotics and was director of the Autodesk Innovation Lab.

She sits on the board of directors of the Ten to the Ninth Plus Foundation, an organization empowering exponential technological change worldwide. Tasha enjoys building things and discovering how things work. She spends a lot of time thinking about how to make user interfaces that facilitate and enhance creativity.

Location

1440 Multiversity | Scotts Valley, CA

Redwood trails, copper-adorned buildings with majestic views, and decks built around sky-high trunks keep you wrapped in nature while on 1440 Multiversity’s 75-acre campus.

Part wellness resort, part TED Talk auditorium, part conference center, the campus features state-of-the-art classrooms, meeting spaces, and accommodations tucked away in a lush forest between Santa Cruz and Silicon Valley. With locally sourced meals, holistic-health classes, a fitness center, an infinity-edge hot tub, and more, your stay at 1440 is designed to nurture, educate, and inspire.

Nearby Airports:

Mineta San Jose (SJC): 29 miles | approximately 40 – 60 minutes

San Francisco (SFO): 60 miles | approximately 60 – 75 minutes

Oakland (OAK): 60 miles | approximately 60 – 90 minutes

Transportation suggestions to and from airports will be sent to registered participants prior to the event.

Beneficial AGI 2019

/in Past Events /by Kirsten Gronlund

After our Puerto Rico AI conference in 2015 and our Asilomar Beneficial AI conference in 2017, we returned to Puerto Rico at the start of 2019 to talk about Beneficial AGI. We couldn’t be more excited to see all of the groups, organizations, conferences and workshops that have cropped up in the last few years to ensure that AI today and in the near future will be safe and beneficial. And so we now wanted to look further ahead to artificial general intelligence (AGI), the classic goal of AI research, which promises tremendous transformation in society. Beyond mitigating risks, we want to explore how we can design AGI to help us create the best future for humanity.

We again brought together an amazing group of AI researchers from academia and industry, as well as thought leaders in economics, law, policy, ethics, and philosophy for five days dedicated to beneficial AI. We hosted a two-day technical workshop to look more deeply at how we can create beneficial AGI, and we followed that with a 2.5-day conference, in which people from a broader AI background considered the opportunities and challenges related to the future of AGI and steps we can take today to move toward an even better future. Honoring the FrieNDA for the conference, we have only posted videos and slides below with approval of the speaker. (See the high-res group photo here.)

Conference Schedule

Friday, January 4

18:00 Early drinks and appetizers

19:00 Conference welcome reception & buffet dinner

20:00 Poster session

Saturday, January 5

Session 1 — Overview Talks

9:00 Welcome & summary of conference plan | FLI Team

Destination:

9:05 Framing the Challenge | Max Tegmark (FLI/MIT)

9:15 Alternative destinations that are popular with participants. What do you prefer?

Technical Safety:

10:00 Provably beneficial AI | Stuart Russell (Berkeley/CHAI) (pdf) (video)

10:30 Technical workshop summary | David Krueger (MILA) (pdf) (video)

10:50 Coffee break

11:00 Strategy/Coordination:

AI strategy, policy, and governance | Allan Dafoe (FHI/GovAI) (pdf) (video)

Towards a global community of shared future in AGI | Brian Tse (FHI) (pdf) (video)

Strategy workshop summary | Jessica Cussins (FLI) (pdf) (video)

12:00 Lunch

13:00 Optional breakouts & free time

Session 2 — Destination | Session Chair: Max Tegmark

16:00 Talk: Summary of discussions and polls about long-term goals, controversies

Panels on the controversies where the participant survey revealed the greatest disagreements:

16:10 Panel: Should we build superintelligence? (video)

Tiejun Huang (Peking)

Tanya Singh (FHI)

Catherine Olsson (Google Brain)

John Havens (IEEE)

Moderator: Joi Ito (MIT)

16:30 Panel: What should happen to humans? (video)

José Hernandez-Orallo (CFI)

Daniel Hernandez (OpenAI)

De Kai (ICSI)

Francesca Rossi (CSER)

Moderator: Carla Gomes (Cornell)

16:50 Panel: Who or what should be in control? (video)

Gaia Dempsey (7th Future)

El Mahdi El Mhamdi (EPFL)

Dorsa Sadigh (Stanford)

Moderator: Meia Chita-Tegmark (FLI)

17:10 Panel: Would we prefer AGI to be conscious? (video)

Bart Selman (Cornell)

Hiroshi Yamakawa (Dwango AI)

Helen Toner (GovAI)

Moderator: Andrew Serazin (Templeton)

17:30 Panel: What goal should civilization strive for? (video)

Joshua Greene (Harvard)

Nick Bostrom (FHI)

Amanda Askell (OpenAI)

Moderator: Lucas Perry (FLI)

18:00 Coffee & snacks

18:20 Panel: If AGI eventually makes all humans unemployable, then how can people be provided with the income, influence, friends, purpose, and meaning that jobs help provide today? (video)

Gillian Hadfield (CHAI/Toronto)

Reid Hoffman (LinkedIn)

James Manyika (McKinsey)

Moderator: Erik Brynjolfsson (MIT)

19:00 Dinner

20:00 Lightning talks

Sunday, January 6

Session 3 — Technical Safety | Session Chair: Victoria Krakovna

Paths to AGI:

9:00 Talk: Challenges towards AGI | Yoshua Bengio (MILA) (pdf) (video)

9:30 Debate: Possible paths to AGI (video)

Yoshua Bengio (MILA)

Irina Higgins (DeepMind)

Nick Bostrom (FHI)

Yi Zeng (Chinese Academy of Sciences)

Moderator: Joshua Tenenbaum (MIT)

10:10 Coffee break

Safety research:

10:25 Talk: AGI safety research agendas | Rohin Shah (Berkeley/CHAI) (pdf) (video)

10:55 Panel: What are the key AGI safety research priorities? (video)

Scott Garrabrant (MIRI)

Daniel Ziegler (OpenAI)

Anca Dragan (Berkeley/CHAI)

Eric Drexler (FHI)

Moderator: Ramana Kumar (DeepMind)

11:30 Debate: Synergies vs. tradeoffs between near-term and long-term AI safety efforts (video)

Neil Rabinowitz (DeepMind)

Catherine Olsson (Google Brain)

Nate Soares (MIRI)

Owain Evans (FHI)

Moderator: Jelena Luketina (Oxford)

12:00 Lunch

13:00 Optional breakouts & free time

15:00 Coffee, snacks, & free time

Session 4 — Strategy & Governance | Session Chair: Anthony Aguirre

16:00 Talk: AGI emergence scenarios, and their relation to destination issues | Anthony Aguirre (FLI)

16:10 Debate: 4-way friendly debate – what would be the best scenario for AGI emergence and early use?

Helen Toner (FHI)

Seth Baum (GCRI)

Peter Eckersley (Partnership on AI)

Miles Brundage (OpenAI)

Moderator: Anthony Aguirre (FLI)

16:50 Talk: AGI – Racing and cooperating | Seán Ó hÉigeartaigh (CSER) (pdf) (video)

17:10 Panel: Where are opportunities for cooperation at the governance level? Can we identify globally shared goals? (video)

Jason Matheny (IARPA)

Charlotte Stix (CFI)

Cyrus Hodes (Future Society)

Bing Song (Berggruen)

Moderator: Danit Gal (Keio)

17:40 Coffee break

17:55 Panel: Where are opportunities for cooperation at the academic & corporate level? (video)

Jason Si (Tencent)

Francesca Rossi (CSER)

Andrey Ustyuzhanin (Higher School of Economics)

Martina Kunz (CFI/CSER)

Teddy Collins (DeepMind)

Moderator: Brian Tse (FHI)

18:25 Talks: Lightning support pitches

19:00 Banquet

20:00 Poster session

Monday, January 7

Session 5 — Action items

9:00 Group discussion and analysis of conference

9:45 Governance panel: What laws, regulations, institutions, and agreement are needed? (video)

Wendell Wallach (Yale)

Nicolas Miailhe (Future Society)

Helen Toner (FHI)

Jeff Cao (Tencent)

Moderator: Tim Hwang (Google)

10:15 Coffee break

10:30 Technical safety student panel: What does the next generation of researchers think that the action items should be? (video)

Jaime Fisac (Berkeley/CHAI)

William Saunders (Toronto)

Smitha Milli (Berkeley/CHAI)

El Mahdi El Mhamdi (EPFL)

Moderator: Dylan Hadfield-Menell (Berkeley/CHAI)

11:10 Prioritization: Everyone e-votes on how to rank and prioritize action items

11:30 Closing remarks

Towards a global community of shared future in AGI | Brian Tse

Strategy workshop summary | Jessica Cussins

Panel: Should we build superintelligence?

Lightning talk | Francesca Rossi

Challenges towards AGI | Yoshua Bengio

AGI safety research agendas | Rohin Shah

AGI emergence scenarios, and their relation to destination issues | Anthony Aguirre

Panel: Where are opportunities for cooperation at the governance level?

Panel: Where are opportunities for cooperation at the academic & corporate level?

Governance Panel: What laws, regulations, institutions, and agreement are needed?

Workshop Schedule

Wednesday, January 2

18:00 Workshop welcome reception, 3-word introductions

Thursday, January 3

9:00 Welcome & summary of workshop plan | FLI Team

Destination Session | Session Chair: Max Tegmark

9:15 Talk: Framing, breakout task assignment

9:30 Breakouts: Parallel group brainstorming sessions

10:45 Coffee break

11:00 Discussion: Destination group reports, debate between panel rapporteurs

12:00 Lunch

13:00 Optional breakouts & free time

15:00 Coffee, snacks, & free time

Technical Safety Session | Session Chair: Victoria Krakovna

16:00 Opening remarks

Talks about latest progress on human-in-the-loop approaches to AI safety

16:05 Talk: Scalable agent alignment | Jan Leike (DeepMind) (pdf)

16:20 Talk: Iterated amplification and debate | Amanda Askell (OpenAI) (pdf)

16:35 Talk: Cooperative inverse reinforcement learning | Dylan Hadfield-Menell (Berkeley/CHAI)

16:50 Talk: The comprehensive AI services framework | Eric Drexler (FHI) (pdf)

17:05 Coffee break

Talks about latest progress on theory approaches to AI safety

17:25 Talk: Embedded agency | Scott Garrabrant (MIRI) (pdf)

17:40 Talk: Measuring side effects | Victoria Krakovna (DeepMind) (pdf)

17:55 Talk: Verification/security/containment | Ramana Kumar (DeepMind) (pdf)

18:10 Coffee break

18:30 Debate: Will future AGI systems be optimizing a single long-term goal?

Rohin Shah (Berkeley/CHAI)

Peter Eckersley (Partnership on AI)

Anna Salamon (CFAR)

Moderator: David Krueger (MILA)

19:00 Dinner

20:30 Destinations groups finish their work as needed

Friday, January 4

Strategy Session | Session Chair: Anthony Aguirre

9:00 Welcome back & framing of strategy challenge

9:10 Talk: Exploring AGI scenarios | Shahar Avin (FHI) (pdf)

9:40 Forecasting: What scenario ingredients are most likely and why? What goes into answering this?

Jade Leung (FHI)

Danny Hernandez (OpenAI)

Gillian Hadfield (CHAI/Toronto)

Malo Bourgon (MIRI)

Moderator: Shahar Avin (FHI)

10:10 Coffee break

10:20 Scenario breakouts

11:20 Reports: Scenario report-backs & discussion panel(s), assignments to action item panels

12:00 Lunch

13:00 Optional breakouts & free time

15:00 Coffee & free time

Action Item Session

15:45 Panel: Destination synthesis – what did all groups agree on, and what are the key controversies worth clarifying? Action items?

Tegan Maharaj (MILA)

Alex Zhu (MIRI)

16:15 Panel: What might the unknown unknowns in the space of AI safety problems look like? How can we broaden our research agendas to capture them?

Jan Leike (DeepMind)

Victoria Krakovna (DeepMind)

Richard Mallah (FLI)

Moderator: Andrew Critch (Berkeley/CHAI)

16:45 Coffee break

17:00 Action items: What institutions, platforms, & organizations do we need?

Jaan Tallinn (CSER/FLI)

Tanya Singh (FHI)

Alex Zhu (MIRI)

Moderator: Allison Duettmann (Foresight)

17:30 Action items: What standards, laws, regulations, & agreements do we need?

Jessica Cussins (FLI)

Teddy Collins (DeepMind)

Gillian Hadfield (CHAI/Toronto)

Moderator: Allan Dafoe (FHI/GovAI)

18:00 Conference welcome reception & buffet dinner

20:00 Poster session

There are no videos from the workshop portion of the conference.

Iterated amplification and debate | Amanda Askell

Measuring side effects | Victoria Krakovna

Exploring AGI scenarios | Shahar Avin

Forecasting: What scenario ingredients are most likely and why?

Action items: What institutions, platforms, & organizations do we need?

Organizers

Anthony Aguirre, Meia Chita-Tegmark, Ariel Conn, Tucker Davey, Victoria Krakovna, Richard Mallah, Lucas Perry, Lucas Sabor, Max Tegmark

Participants

Bios for the attendees and participants can be found here.

2018 Spring Conference: Invest in Minds Not Missiles

/in Conferences and workshops, Events, Nuclear, Past Events, recent news /by The FLI Team

On Saturday, April 7th, and Sunday morning, April 8th, MIT and Massachusetts Peace Action will co-host a conference and workshop at MIT on understanding and reducing the risk of nuclear war. Tickets are free for students. To attend, please register here.

Saturday sessions

- Continuing Dangers from Nuclear Weapons

- International Initiatives Toward Disarmament

- Joseph Gerson, Hon. John Tierney, Charles Knight, Chuck Johnson, Jim Anderson (Chair).

- Political Initiatives

- John Tierney, Medea Benjamin, Kristina Romines, Jonathan King, Andrea Miller (Chair).

- Campus Organizing for Peace & Justice: What Works? What Doesn’t? Where Next?

- Campus Peace leaders including Caitlin Forbes (Mass Peace Action), Kate Alexander (Peace Action of NY), Eric Stolar (Fordham), Luisa Kenausis (MIT).

- Actions for the Coming Period: Shout Heard Round the World

- Michelle Cunha, Poor Peoples 40 Day Campaign (Paul Johnson), Peace for Koreans (Mike Vanelzakker), Cole Harrison (Chair).

Workshops

- Resisting the Trillion-Dollar Nuclear Weapons Escalation

- Congressional Budget: Civilian vs. Pentagon

- Don’t Bank on the Bomb Divestment Campaigns

- Preventing Nuclear Weapons Use

- Lisbeth Gronlund, Dr. Kea van der Ziel, Prof. Elaine Scarry, Shelagh Foreman, Jerold Ross (Chair).

Sunday Morning Planning Breakfast

Student-led session to design and implement programs enhancing existing campus groups, and organizing new ones; extending the network to campuses in Rhode Island, Connecticut, New Jersey, New Hampshire, Vermont and Maine.

For more information, contact Jonathan King at <jaking@mit.edu> or call 617-354-2169.

Beneficial AI 2017

/in Past Events /by Ariel Conn

In our sequel to the 2015 Puerto Rico AI conference, we brought together an amazing group of AI researchers from academia and industry, and thought leaders in economics, law, ethics, and philosophy for five days dedicated to beneficial AI. We hosted a two-day workshop for our grant recipients to give them an opportunity to highlight and discuss the progress with their grants. We followed that with a 2.5-day conference, in which people from various AI-related fields hashed out opportunities and challenges related to the future of AI and steps we can take to ensure that the technology is beneficial. Honoring the FrieNDA for the conference, we are only posting videos and slides below with approval of the speaker. Learn more about the Asilomar AI Principles that resulted from the conference, the process involved in developing them, and the resulting discussion about each principle. (It looks like we’ll be posting almost everything, but please be patient while we finish editing and uploading videos, etc.)

Conference Schedule

Thursday January 5

All afternoon: registration open; come chill & meet old and new friends!

1800-2100: Welcome reception

Friday January 6

0730-0900: Breakfast

0900-1200: Opening keynotes on AI, economics & law: Progress since Puerto Rico 2015

- Welcome Remarks by Max Tegmark (video)

- Talks:

- Group photo

1200-1300: Lunch

1300-1500: Breakout sessions

1500-1800: Economics: How can we grow our prosperity through automation without leaving people lacking income or purpose?

- Talk: Daniela Rus (MIT) (video)

- Panel with Daniela Rus (MIT), Andrew Ng (Baidu), Mustafa Suleyman (DeepMind), Moshe Vardi (Rice) & Peter Norvig (Google): How AI is automating and augmenting work (video)

- Talks:

- Panel with Andrew McAfee (MIT), Jeffrey Sachs (Columbia), Eric Schmidt (Google) & Reid Hoffman (LinkedIn): Implications of AI for the Economy and Society (video)

- Fireside chat with Daniel Kahneman: What makes people happy? (video)

1800-2100: Dinner

Saturday January 7

0730-0900: Breakfast

0900-1200: Creating human-level AI: Will it happen, and if so, when and how? What key remaining obstacles can be identified? How can we make future AI systems more robust than today’s, so that they do what we want without crashing, malfunctioning or getting hacked?

- Talks:

- Panel with Anca Dragan (Berkeley), Demis Hassabis (DeepMind), Guru Banavar (IBM), Oren Etzioni (Allen Institute), Tom Gruber (Apple), Jürgen Schmidhuber (Swiss AI Lab), Yann LeCun (Facebook/NYU), Yoshua Bengio (Montreal) (video)

1200-1300: Lunch

1300-1500: Breakout sessions

1500-1800: Superintelligence: Science or fiction? If human level general AI is developed, then what are likely outcomes? What can we do now to maximize the probability of a positive outcome? (video)

- Talks:

- Panel with Bart Selman (Cornell), David Chalmers (NYU), Elon Musk (Tesla, SpaceX), Jaan Tallinn (CSER/FLI), Nick Bostrom (FHI), Ray Kurzweil (Google), Stuart Russell (Berkeley), Sam Harris, Demis Hassabis (DeepMind): If we succeed in building human-level AGI, then what are likely outcomes? What would we like to happen?

- Panel with Dario Amodei (OpenAI), Nate Soares (MIRI), Shane Legg (DeepMind), Richard Mallah (FLI), Stefano Ermon (Stanford), Viktoriya Krakovna (DeepMind/FLI): Technical research agenda: What can we do now to maximize the chances of a good outcome? (video)

1800-2200: Banquet

Sunday January 8

0730-0900: Breakfast

0900-1200: Law, policy & ethics: How can we update legal systems, international treaties and algorithms to be more fair, ethical and efficient and to keep pace with AI?

- Talks:

- Panel with Martin Rees (CSER/Cambridge), Heather Roff-Perkins, Jason Matheny (IARPA), Steve Goose (HRW), Irakli Beridze (UNICRI), Rao Kambhampati (AAAI, ASU), Anthony Romero (ACLU): Policy & Governance (video)

- Panel with Kate Crawford (Microsoft/MIT), Matt Scherer, Ryan Calo (U. Washington), Kent Walker (Google), Sam Altman (OpenAI): AI & Law (video)

- Panel with Kay Firth-Butterfield (IEEE, Austin-AI), Wendell Wallach (Yale), Francesca Rossi (IBM/Padova), Huw Price (Cambridge, CFI), Margaret Boden (Sussex): AI & Ethics (video)

1200-1300: Lunch

1300: Depart

Workshop Schedule

Tuesday January 3

All afternoon: workshop registration open; come chill & meet old and new friends!

1800-2100: Welcome reception, breakout session sign-up

Wednesday January 4

0730-0900: Breakfast

0900-1200: Talks & discussions

- Grant-winner talks on value learning: Stuart Russell (Berkeley) (pdf), Francesca Rossi (IBM/Padova) (pdf), Owain Evans (FHI) (pdf), Adrian Weller (Cambridge), Vincent Conitzer (Duke) (pdf)

- Panel with above speakers: Future directions in value learning

- Panel with Tom Dietterich, Manuela Veloso, Bart Selman & Jürgen Schmidhuber: Will we ever build human-level AI?

1200-1300: Lunch

1300-1400: Lightning talks: Dario Amodei (OpenAI) (pdf), Catherine Olsson (OpenAI) (pdf), Andrew Critch (CHCAI/MIRI) (link), Eric Drexler (MIT), Allan Dafoe (Yale), Jürgen Schmidhuber (Swiss AI Lab) (pdf), Toby Walsh (UNSW) (pdf)

1400-1500: Breakout session A

1500-1800: Talks & discussions

- Grant-winner talks on verification, safe self-modification, and complexity: Bart Selman (Cornell) (pdf), Stefano Ermon (Stanford) (pdf), Katja Grace (MIRI), Andrew Critch (CHCAI/MIRI) (pdf), Ramana Kumar (Data61/CSIRO/UNSW) (pdf) & Bas Steunebrink (IDSIA) (pdf)

- Panel with above speakers: Future directions in these areas

- Panel with above speakers and Toby Walsh: Recursive self-improvement: fast takeoff, slow takeoff or no takeoff?

1800-2100: Dinner

Thursday January 5

0730-0900: Breakfast

0900-1200: Talks & discussions

- Grant-winner talks on control, uncertainty identification & management: Thomas Dietterich (OSU) (pdf), Manuela Veloso (Carnegie Mellon) (pdf), Percy Liang (Stanford) (pdf), Long Ouyang (pdf), Paul Christiano (OpenAI) (pdf), Fuxin Li (OSU) (pdf) & Brian Ziebart (U. Chicago) (pdf)

- Panel with above speakers: Future directions in these areas

- “Red-team” Panel with Dario Amodei (OpenAI), Eric Drexler (MIT), Jan Leike (FHI/DeepMind), Percy Liang (Stanford) & Eliezer Yudkowsky (MIRI): What could go wrong with AGI and how can these problems be avoided?

1200-1300: Lunch

1300-1400: Breakout session B

1400-1500: Breakout session C

1500-1800: Talks & discussions

- Grant-winner talks on governance & ethics: Nick Bostrom (Oxford), Wendell Wallach (Yale) (pdf), Heather Roff Perkins (Oxford/ASU) (pdf), Peter Asaro (New School) (pdf) & Moshe Vardi (Rice) (pdf)

- Grant-winner talks on education: Anna Salamon (CFAR) & Paul Christiano (OpenAI) (pdf)

- Panel with Anna Salamon (CFAR) & Paul Christiano (OpenAI): Educating and mentoring AI safety researchers (Moderator: Andrew Critch)

- Grant-winner talk: Owen Cotton-Barratt (GPP) (pdf)

- Panel with Allan Dafoe (Yale), Anthony Aguirre (FLI) & Owen Cotton-Barratt (GPP): Prediction (Moderator: Jaan Tallinn (FLI/CSER))

1800-2100: Welcome reception for Beneficial AI 2017 conference

Scientific Organizing Committee

Erik Brynjolfsson, MIT, Professor at the MIT Sloan School of Management, co-author of The Second Machine Age

Eric Horvitz, Microsoft, co-chair of the AAAI presidential panel on long-term AI futures

Peter Norvig, Google, Director of Research, co-author of the standard textbook Artificial Intelligence: a Modern Approach

Francesca Rossi, Univ. Padova, Professor of Computer Science, IBM, President of the International Joint Conference on Artificial Intelligence

Stuart Russell, UC Berkeley, Professor of Computer Science, director of the Center for Intelligent Systems, and co-author of the standard textbook Artificial Intelligence: a Modern Approach

Bart Selman, Cornell University, Professor of Computer Science, co-chair of the AAAI presidential panel on long-term AI futures

Max Tegmark, MIT, Professor of Physics

Local Organizers

Anthony Aguirre, Meia Chita-Tegmark, Ariel Conn, Tucker Davey, Lucas Perry, Viktoriya Krakovna, Janos Kramar, Richard Mallah, Max Tegmark.

Sponsors

A special thank you to our generous sponsors: Alexander Tamas; The Center for Brains, Minds, and Machines; Elon Musk; Jaan Tallinn; and The Open Philanthropy Project.

Bios for the attendees and participants can be found here.

Standing Row: Patrick Lin, Daniel Weld, Ariel Conn, Nancy Chang, Tom Mitchell, Ray Kurzweil, Daniel Dewey, Margaret Boden, Peter Norvig, Nick Hay, Moshe Vardi, Scott Siskind, Nick Bostrom, Francesca Rossi, Shane Legg, Manuela Veloso, David Marble, Katja Grace, Irakli Beridze, Marty Tenenbaum, Gill Pratt, Martin Rees, Joshua Greene, Matt Scherer, Angela Kane, Amara Angelica, Jeff Mohr, Mustafa Suleyman, Steve Omohundro, Kate Crawford, Vitalik Buterin, Yutaka Matsuo, Stefano Ermon, Michael Wellman, Bas Steunebrink, Wendell Wallach, Allan Dafoe, Toby Ord, Thomas Dietterich, Daniel Kahneman, Dario Amodei, Eric Drexler, Tomaso Poggio, Eric Schmidt, Pedro Ortega, David Leake, Sean O’Heigeartaigh, Owain Evans, Jaan Tallinn, Anca Dragan, Sean Legassick, Toby Walsh, Peter Asaro, Kay Firth-Butterfield, Philip Sabes, Paul Merolla, Bart Selman, Tucker Davey, ?, Jacob Steinhardt, Moshe Looks, Josh Tenenbaum, Tom Gruber, Andrew Ng, Kareem Ayoub, Craig Calhoun, Percy Liang, Helen Toner, David Chalmers, Richard Sutton, Claudia Passos-Ferreira, Janos Kramar, William MacAskill, Eliezer Yudkowsky, Brian Ziebart, Huw Price, Carl Shulman, Neil Lawrence, Richard Mallah, Jürgen Schmidhuber, Dileep George, Jonathan Rothberg, Noah Rothberg

Sitting Row: Anthony Aguirre, Sonia Sachs, Lucas Perry, Jeffrey Sachs, Vincent Conitzer, Steve Goose, Victoria Krakovna, Owen Cotton-Barratt, Daniela Rus, Dylan Hadfield-Menell, Verity Harding, Shivon Zilis, Laurent Orseau, Ramana Kumar, Nate Soares, Andrew McAfee, Jack Clark, Anna Salamon, Long Ouyang, Andrew Critch, Paul Christiano, Yoshua Bengio, David Sanford, Catherine Olsson, Jessica Taylor, Martina Kunz, Kristinn Thorisson, Stuart Armstrong, Yann LeCun, Alexander Tamas, Roman Yampolskiy, Marin Soljacic, Lawrence Krauss, Stuart Russell, Eric Brynjolfsson, Ryan Calo, ShaoLan Hsueh, Meia Chita-Tegmark, Kent Walker, Heather Roff, Meredith Whittaker, Max Tegmark, Adrian Weller, Jose Hernandez-Orallo, Andrew Maynard, John Hering, Abram Demski, Nicolas Berggruen, Gregory Bonnet, Sam Harris, Tim Hwang, Andrew Snyder-Beattie, Marta Halina, Sebastian Farquhar, Stephen Cave, Jan Leike, Tasha McCauley, Joseph Gordon-Levitt

Not in photo: Guru Banavar, Sam Teller, Anthony Romero, Elon Musk, Larry Page, Sam Altman, Oren Etzioni, Chelsea Finn, Ian Goodfellow, Reid Hoffman, Holden Karnofsky, Sergey Levine, Fuxin Li, Jason Matheny, Andrew Serazin, Ilya Sutskever

The view from the conference:

AI safety conference in Puerto Rico

The Future of AI: Opportunities and Challenges

This conference brought together the world’s leading AI builders from academia and industry to engage with each other and experts in economics, law and ethics. The goal was to identify promising research directions that can help maximize the future benefits of AI while avoiding pitfalls (see this open letter and this list of research priorities). To facilitate candid and constructive discussions, there was no media present and the Chatham House Rule applied: nobody’s talks or statements will be shared without their permission.

Where? San Juan, Puerto Rico

When? Arrive by evening of Friday January 2, depart after lunch on Monday January 5 (see program below)

Scientific organizing committee:

- Erik Brynjolfsson, MIT, Professor at the MIT Sloan School of Management, co-author of The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies

- Demis Hassabis, Founder, DeepMind

- Eric Horvitz, Microsoft, co-chair of the AAAI presidential panel on long-term AI futures

- Shane Legg, Founder, DeepMind

- Peter Norvig, Google, Director of Research, co-author of the standard textbook Artificial Intelligence: a Modern Approach.

- Francesca Rossi, Univ. Padova, Professor of Computer Science, President of the International Joint Conference on Artificial Intelligence

- Stuart Russell, UC Berkeley, Professor of Computer Science, director of the Center for Intelligent Systems, and co-author of the standard textbook Artificial Intelligence: a Modern Approach.

- Bart Selman, Cornell University, Professor of Computer Science, co-chair of the AAAI presidential panel on long-term AI futures

- Murray Shanahan, Imperial College, Professor of Cognitive Robotics

- Mustafa Suleyman, Founder, DeepMind

- Max Tegmark, MIT, Professor of physics, author of Our Mathematical Universe

Local Organizers:

Anthony Aguirre, Meia Chita-Tegmark, Viktoriya Krakovna, Janos Kramar, Richard Mallah, Max Tegmark, Susan Young

Support: Funding and organizational support for this conference is provided by Skype-founder Jaan Tallinn, the Future of Life Institute and the Center for the Study of Existential Risk.

PROGRAM

Friday January 2:

1600-late: Registration open

1930-2130: Welcome reception (Las Olas Terrace)

Saturday January 3:

0800-0900: Breakfast

0900-1200: Overview (one review talk on each of the four conference themes)

• Welcome

• Ryan Calo (Univ. Washington): AI and the law

• Erik Brynjolfsson (MIT): AI and economics (pdf)

• Richard Sutton (Alberta): Creating human-level AI: how and when? (pdf)

• Stuart Russell (Berkeley): The long-term future of (artificial) intelligence (pdf)

1200-1300: Lunch

1300-1515: Free play/breakout sessions on the beach

1515-1545: Coffee & snacks

1545-1600: Breakout session reports

1600-1900: Optimizing the economic impact of AI

(A typical 3-hour session consists of a few 20-minute talks followed by a discussion panel where the panelists who haven’t already given talks get to give brief introductory remarks before the general discussion ensues.)

What can we do now to maximize the chances of reaping the economic bounty from AI while minimizing unwanted side-effects on the labor market?

Speakers:

• Andrew McAfee, MIT (pdf)

• James Manyika, McKinsey (pdf)

• Michael Osborne, Oxford (pdf)

Panelists include Ajay Agrawal (Toronto), Erik Brynjolfsson (MIT), Robin Hanson (GMU), Scott Phoenix (Vicarious)

1900: dinner

Sunday January 4:

0800-0900: Breakfast

0900-1200: Creating human-level AI: how and when?

Short talks followed by panel discussion: will it happen, and if so, when? Via engineered solution, whole brain emulation, or other means? (Questions about what will happen, whether machines will have goals, ethics, etc. are deferred until the 4pm session.)

Speakers:

• Demis Hassabis, Google/DeepMind

• Dileep George, Vicarious (pdf)

• Tom Mitchell, CMU (pdf)

Panelists include Joscha Bach (MIT), Francesca Rossi (Padova), Richard Mallah (Cambridge Semantics), Richard Sutton (Alberta)

1200-1300: Lunch

1300-1515: Free play/breakout sessions on the beach

1515-1545: Coffee & snacks

1545-1600: Breakout session reports

1600-1900: Intelligence explosion: science or fiction?

If an intelligence explosion happens, then what are likely outcomes? What can we do now to maximize the probability of a positive outcome? Containment problem? Is “friendly AI” possible? Feasible? Likely to happen?

Speakers:

• Nick Bostrom, Oxford (pdf)

• Bart Selman, Cornell (pdf)

• Jaan Tallinn, Skype founder (pdf)

• Elon Musk, SpaceX, Tesla Motors

Panelists include Shane Legg (Google/DeepMind), Murray Shanahan (Imperial), Vernor Vinge (San Diego), Eliezer Yudkowsky (MIRI)

1930: banquet (outside by beach)

Monday January 5:

0800-0900: Breakfast

0900-1200: Law & ethics: Improving the legal framework for autonomous systems

How should legislation be improved to best protect the AI industry and consumers? If self-driving cars cut the 32,000 annual US traffic fatalities in half, the car makers won’t get 16,000 thank-you notes, but 16,000 lawsuits. How can we ensure that autonomous systems do what we want? And who should be held liable if things go wrong? How do we tackle criminal AI? AI ethics? AI ethics/legal framework for military systems & financial systems?

Speakers:

• Joshua Greene, Harvard (pdf)

• Heather Roff Perkins, Univ. Denver (pdf)

• David Vladeck, Georgetown

Panelists include Ryan Calo (Univ. Washington), Tom Dietterich (Oregon State, AAAI president), Kent Walker (General Counsel, Google)

1200: Lunch, depart

PARTICIPANTS

You’ll find a list of participants and their bios here.

Back row, from left to right: Tom Mitchell, Seán Ó hÉigeartaigh, Huw Price, Shamil Chandaria, Jaan Tallinn, Stuart Russell, Bill Hibbard, Blaise Agüera y Arcas, Anders Sandberg, Daniel Dewey, Stuart Armstrong, Luke Muehlhauser, Tom Dietterich, Michael Osborne, James Manyika, Ajay Agrawal, Richard Mallah, Nancy Chang, Matthew Putman

Other standing, left to right: Marilyn Thompson, Rich Sutton, Alex Wissner-Gross, Sam Teller, Toby Ord, Joscha Bach, Katja Grace, Adrian Weller, Heather Roff-Perkins, Dileep George, Shane Legg, Demis Hassabis, Wendell Wallach, Charina Choi, Ilya Sutskever, Kent Walker, Cecilia Tilli, Nick Bostrom, Erik Brynjolfsson, Steve Crossan, Mustafa Suleyman, Scott Phoenix, Neil Jacobstein, Murray Shanahan, Robin Hanson, Francesca Rossi, Nate Soares, Elon Musk, Andrew McAfee, Bart Selman, Michele Reilly, Aaron VanDevender, Max Tegmark, Margaret Boden, Joshua Greene, Paul Christiano, Eliezer Yudkowsky, David Parkes, Laurent Orseau, JB Straubel, James Moor, Sean Legassick, Mason Hartman, Howie Lempel, David Vladeck, Jacob Steinhardt, Michael Vassar, Ryan Calo, Susan Young, Owain Evans, Riva-Melissa Tez, János Kramár, Geoff Anders, Vernor Vinge, Anthony Aguirre

Seated: Sam Harris, Tomaso Poggio, Marin Soljačić, Viktoriya Krakovna, Meia Chita-Tegmark

Behind the camera: Anthony Aguirre (and also photoshopped in by the human-level intelligence on his left)


Policy Exchange: Co-organized with CSER

This event occurred on September 1, 2015.

When one of the world’s leading experts in Artificial Intelligence makes a speech suggesting that a third of existing British jobs could be made obsolete by automation, it is time for think tanks and the policymaking community to take notice. This observation – by Associate Professor of Machine Learning at the University of Oxford, Michael Osborne – was one of many thought-provoking comments made at a special event on the policy implications of the rise of AI that we held this week with the Cambridge Centre for the Study of Existential Risk.

The event formed part of Policy Exchange’s long-running research programme looking at reforms that are needed in policies relating to welfare and the workplace – as well as other long-term challenges facing the country. We gathered together the world’s leading authorities on AI to consider the rise of this new technology, which will form one of the greatest challenges facing our society in this century. The speakers were the following:

• Huw Price, Bertrand Russell Professor of Philosophy and a Fellow of Trinity College at the University of Cambridge, and co-founder of the Centre for the Study of Existential Risk.

• Stuart Russell, Professor at the University of California at Berkeley and author of the standard textbook on AI.

• Nick Bostrom, Professor at Oxford University, author of “Superintelligence” and Founding Director of the Future of Humanity Institute.

• Michael Osborne, Associate Professor at Oxford University and co-director of the Oxford Martin programme on Technology and Employment.

• Murray Shanahan, Professor at Imperial College London, scientific advisor to the film Ex Machina, and author of “The Technological Singularity”.

In this bulletin, we ask two of our speakers to share their views about the potential and risks from future AI:

Michael Osborne: Machine Learning and the Future of Work

Machine learning is the study of algorithms that can learn and act. Why use a machine when we already have over six billion humans to choose from? One reason is that algorithms are significantly cheaper, and becoming ever more so. But just as important, algorithms can often do better than humans, avoiding the biases that taint human decision making.

There are big benefits to be gained from the rise of the algorithms. Big data is already leading to programs that can handle increasingly sophisticated tasks, such as translation. Computational health informatics will transform health monitoring, allowing us to release patients from their hospital beds much earlier and freeing up resources in the NHS. Self-driving cars will allow us to cut down on the 90% of traffic accidents caused by human error, while the data generated by their constant monitoring of the impact will have big consequences for mapping, insurance, and the law.

Nevertheless, there will be big challenges from the disruption automation creates. New technologies derived from mobile machine learning and robotics threaten employment in logistics, sales and clerical occupations. Over the next few decades, 47% of jobs in America are at high risk of automation, and 35% of jobs in the UK. Worse, it will be the already vulnerable who are most at risk, while high-skilled jobs are relatively resistant to computerisation. New jobs are emerging to replace the old, but only slowly – only 0.5% of the US workforce is employed in new industries created in the 21st century.

Policy makers are going to have to do more to ensure that we can all share in the great prosperity promised by technology.

Stuart Russell: Killer Robots, the End of Humanity, and All That: Policy Implications

Everything civilisation offers is the product of intelligence. If we can use AI to amplify our intelligence, the benefits to humanity are potentially immeasurable.

The good news is that progress is accelerating. Solid theoretical foundations, more data and computing, and huge investments from private industry have created a virtuous cycle of iterative improvement. On the current trajectory, further real-world impact is inevitable.

Of course, not all impact is good. As technology companies unveil ever more impressive demos, newspapers have been full of headlines warning of killer robots, or the loss of half of all jobs, or even the end of humanity. But how credible exactly are these nightmare scenarios? The short answer is we should not panic, but there are real risks that are worth taking seriously.

In the short term, lethal autonomous weapons, or weapon systems that can select and fire upon targets on their own, are worth taking seriously. According to defence experts, including the Ministry of Defence, these are probably feasible now, and they have already been the subject of three UN meetings in 2014-15. In the future, they are likely to be relatively cheap to mass produce, potentially making them much harder to control or contain than nuclear weapons. A recent open letter from 3,000 AI researchers argued for a total ban on the technology to prevent the start of a new arms race.

Looking further ahead, however, what if we succeed in creating an AI system that can make decisions as well as, or even significantly better than, humans? The first thing to say is that we are several conceptual breakthroughs away from constructing such a general artificial intelligence, as compared to the more specific algorithms needed for an autonomous weapon or self-driving car.

It is highly unlikely that we will be able to create such an AI within the next five to ten years, but then conceptual breakthroughs are by their very nature hard to predict. The day before Leo Szilard conceived of neutron-induced chain reactions, the key to nuclear power, Lord Rutherford was claiming that, “anyone who looks for a source of power in the transformation of the atoms is talking moonshine.”

The danger from such a “superintelligent” AI is that it would not by default share the same goals as us. Even if we could agree amongst ourselves what the best human values were, we do not understand how to reliably formalise them into a programme. If we accidentally give a superintelligent AI the wrong goals, it could prove very difficult to stop.

For example, for many benign-sounding final goals we might try to give the computer, two plausible intermediate goals for an AI are to gain as many physical resources as possible and to refuse to allow itself to be terminated. We might think that we are just asking the machine to calculate as many digits of pi as possible, but it could judge that the best way to do so is to turn the whole Earth into a supercomputer.

In short, we are moving with increasing speed towards what could be the biggest event in human history. Like global warming, there are significant uncertainties involved and the pain might not come for another few decades – but equally like global warming, the sooner we start to look at potential solutions the more likely we are to be successful. Given the complexity of the problem, we need much more technical research on what an answer might look like.

Martin Rees: Catastrophic Risks: The Downsides of Advancing Technology

This event was held Thursday, November 6, 2014 in Harvard auditorium Jefferson Hall 250.

Our Earth is 45 million centuries old. But this century is the first when one species, ours, can determine the biosphere’s fate. Threats from the collective “footprint” of 9 billion people seeking food, resources and energy are widely discussed. But less well studied is the potential vulnerability of our globally-linked society to the unintended consequences of powerful technologies: not only nuclear, but (even more) biotech, advanced AI, geo-engineering and so forth. More information here.

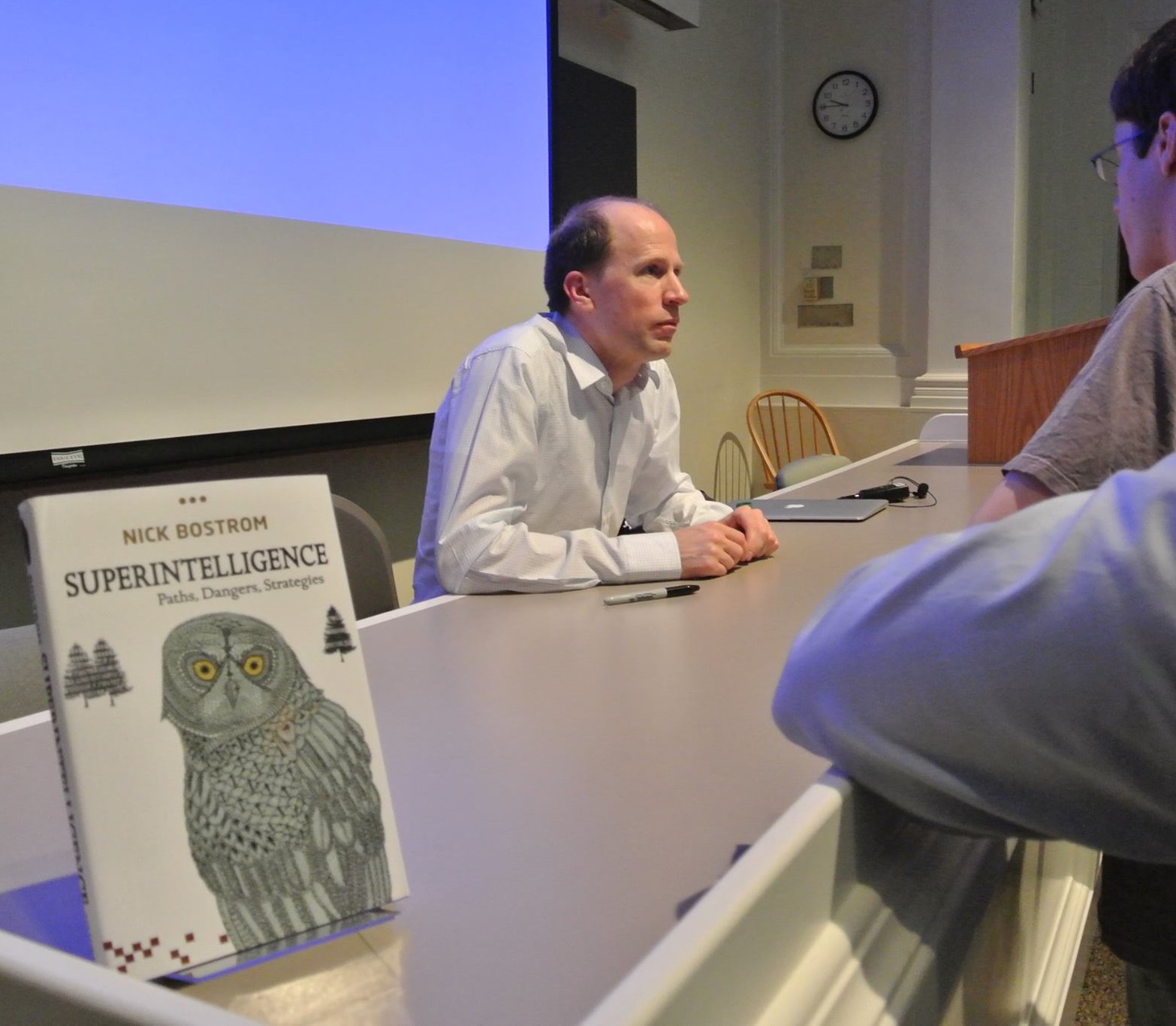

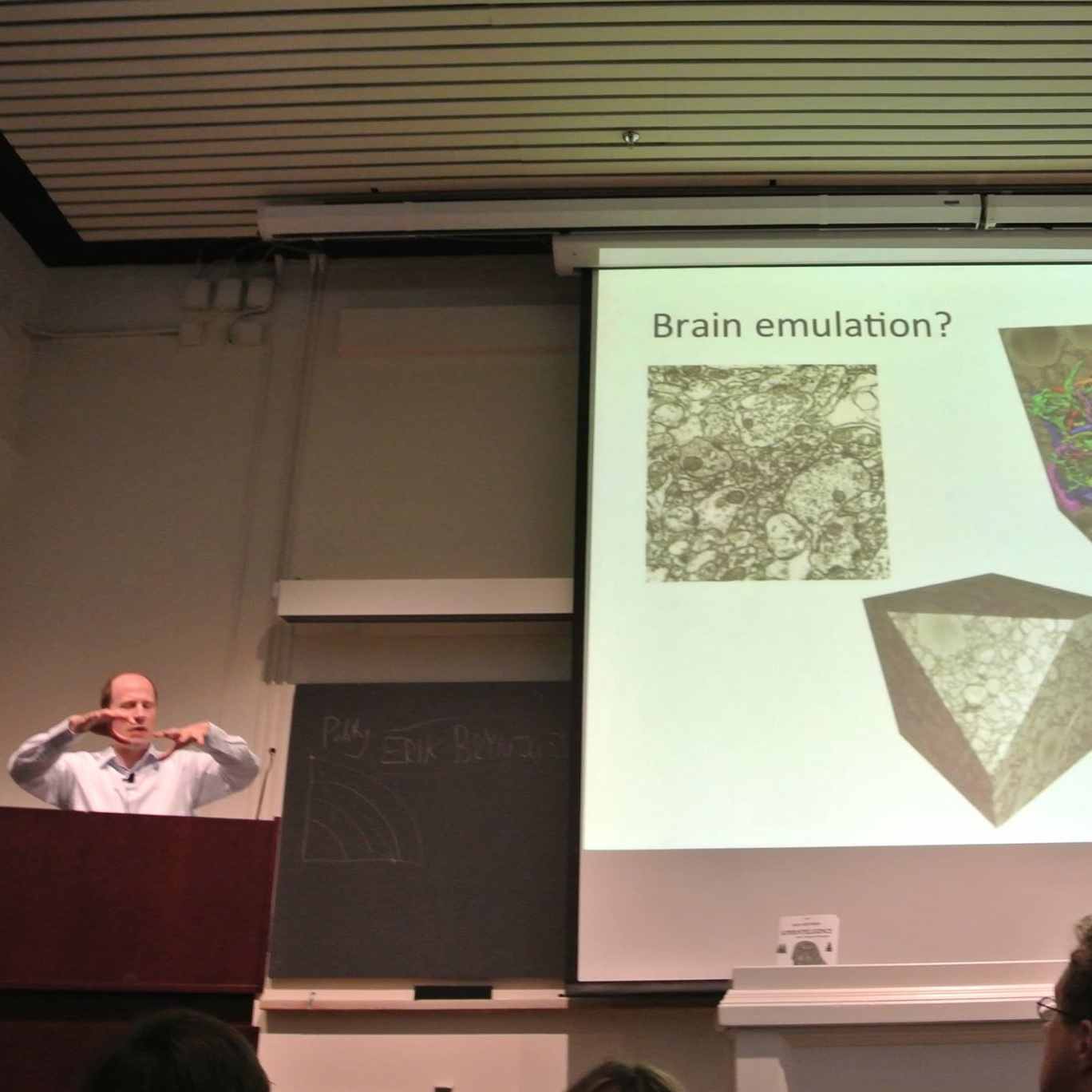

Nick Bostrom: Superintelligence — Paths, Dangers, Strategies

This event was held Thursday, September 4th, 2014 in Harvard auditorium Emerson 105.

What happens when machines surpass humans in general intelligence? Will artificial agents save or destroy us? In his new book – Superintelligence: Paths, Dangers, Strategies – Professor Bostrom explores these questions, laying the foundation for understanding the future of humanity and intelligent life.

[Photos from the talk]

Max Tegmark: “Ask Max Anything” on Reddit

Max Tegmark answers the questions of reddit.com’s user base! Questions are on the subject of his book “Our Mathematical Universe”, physics, x-risks, AI safety, and AI research.

The Future of Technology: Benefits and Risks

This event was held Saturday May 24, 2014 at 7pm in MIT auditorium 10-250 – see video, transcript and photos below.

The coming decades promise dramatic progress in technologies from synthetic biology to artificial intelligence, with both great benefits and great risks. Please watch the video below for a fascinating discussion about what we can do now to improve the chances of reaping the benefits and avoiding the risks, moderated by Alan Alda and featuring George Church (synthetic biology), Ting Wu (personal genetics), Andrew McAfee (second machine age, economic bounty and disparity), Frank Wilczek (near-term AI and autonomous weapons) and Jaan Tallinn (long-term AI and singularity scenarios).

- Alan Alda is an Oscar-nominated actor, writer, director, and science communicator, whose contributions range from M*A*S*H to Scientific American Frontiers.

- George Church is a professor of genetics at Harvard Medical School, initiated the Personal Genome Project, and invented DNA array synthesizers.

- Andrew McAfee is Associate Director of the MIT Center for Digital Business and author of the New York Times bestseller The Second Machine Age.

- Jaan Tallinn is a founding engineer of Skype and philanthropically supports numerous research organizations aimed at reducing existential risk.

- Frank Wilczek is a physics professor at MIT and a 2004 Nobel laureate for his work on the strong nuclear force.

- Ting Wu is a professor of Genetics at Harvard Medical School and Director of the Personal Genetics Education project.

[Photos from the talk]