Existential Risks Are More Likely to Kill You Than Terrorism

People tend to worry about the wrong things.

According to a 2015 Gallup Poll, 51% of Americans are “very worried” or “somewhat worried” that a family member will be killed by terrorists. Another Gallup Poll found that 11% of Americans are afraid of “thunder and lightning.” Yet the average person is at least four times more likely to die from a lightning bolt than a terrorist attack.

Similarly, statistics show that people are more likely to be killed by a meteorite than a lightning strike (here’s how). Yet I suspect that most people are less afraid of meteorites than lightning. In these examples and so many others, we tend to fear improbable events while often dismissing more significant threats.

One finds a similar reversal of priorities when it comes to the worst-case scenarios for our species: existential risks. These are catastrophes that would either annihilate humanity or permanently compromise our quality of life. While risks of this sort are often described as “high-consequence, improbable events,” a careful look at the numbers by leading experts in the field reveals that they are far more likely than most of the risks people worry about on a daily basis.

Let’s use the probability of dying in a car accident as a point of reference, since it is more probable than any of the risks mentioned above. According to the 2016 Global Challenges Foundation report, “The annual chance of dying in a car accident in the United States is 1 in 9,395.” This means that if the average person lived 80 years, the odds of dying in a car crash would be about 1 in 120. (In percentages, that’s roughly 0.01% per year, or 0.8% over a lifetime.)
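The conversion from an annual risk to a lifetime risk can be checked with a few lines of Python. This is a minimal sketch that assumes, as a simplification, the same independent risk in every year of an 80-year life; the function name `cumulative_risk` is illustrative and not taken from any of the reports cited here.

```python
def cumulative_risk(annual_probability: float, years: int) -> float:
    """Chance that an event happens at least once in `years` independent years."""
    # 1 minus the probability of avoiding the event in every single year
    return 1 - (1 - annual_probability) ** years


annual_car = 1 / 9395  # annual US figure quoted from the Global Challenges Foundation report
lifetime_car = cumulative_risk(annual_car, 80)

print(f"annual risk:   {annual_car:.4%}")
print(f"lifetime risk: {lifetime_car:.2%} (about 1 in {1 / lifetime_car:.0f})")
```

This gives an annual risk of about 0.011% and a lifetime risk of about 0.85%, or roughly 1 in 118, which the article rounds to 1 in 120 and 0.8%.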

Compare this to the probability of human extinction stipulated by the influential “Stern Review on the Economics of Climate Change,” namely 0.1% per year.* A human extinction event could be caused by an asteroid impact, supervolcanic eruption, nuclear war, a global pandemic, or a superintelligence takeover. Although this figure appears small, it compounds over time into something quite significant: it implies that the likelihood of human extinction over the course of a century is 9.5%. It follows that your chances of dying in a human extinction event are nearly 10 times higher than your chances of dying in a car accident.
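The same compounding rule reproduces the 9.5% figure. The sketch below again assumes a constant, independent annual probability (and the Stern Review’s 0.1% is itself an assumption, not an empirical estimate). It also compares both risks over the same 80-year window, which gives a ratio of about 9, in line with “nearly 10 times.”

```python
def cumulative_risk(annual_probability: float, years: int) -> float:
    """Chance that an event happens at least once in `years` independent years."""
    return 1 - (1 - annual_probability) ** years


annual_extinction = 0.001  # Stern Review's assumed 0.1% per year
annual_car = 1 / 9395      # Global Challenges Foundation figure

century_extinction = cumulative_risk(annual_extinction, 100)
# Compare over the same 80-year lifetime so the ratio is like-for-like
lifetime_extinction = cumulative_risk(annual_extinction, 80)
lifetime_car = cumulative_risk(annual_car, 80)
ratio = lifetime_extinction / lifetime_car

print(f"extinction within a century: {century_extinction:.1%}")
print(f"extinction within 80 years:  {lifetime_extinction:.1%}")
print(f"ratio to car-crash risk:     {ratio:.1f}x")
```

Under these assumptions the century figure comes out at about 9.5%, the 80-year figure at about 7.7%, and the ratio to the car-crash risk at roughly 9.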

But how seriously should we take the 9.5% figure? Is it a plausible estimate of human extinction? The Stern Review is explicit that the number isn’t based on empirical considerations; it’s merely a useful assumption. The scholars who have considered the evidence, though, generally offer probability estimates higher than 9.5%. For example, a 2008 survey taken during a Future of Humanity Institute conference put the likelihood of extinction this century at 19%. The philosopher and futurist Nick Bostrom argues that it “would be misguided” to assign a probability of less than 25% to an existential catastrophe before 2100, adding that “the best estimate may be considerably higher.” And in his book Our Final Hour, Sir Martin Rees claims that civilization has a fifty-fifty chance of making it through the present century.

My own view more or less aligns with Rees’s, given that future technologies are likely to introduce entirely new existential risks. A discussion of existential risks five decades from now could be dominated by scenarios that are unknowable to contemporary humans, just as nuclear weapons, engineered pandemics, and the possibility of “grey goo” were unknowable to people in the fourteenth century. This suggests that Rees may even be underestimating the risk, since his figure is based on an analysis of currently known technologies.

If these estimates are correct, then the average person is roughly 19, 25, or even 50 times more likely, respectively, to encounter an existential catastrophe than to perish in a car accident.
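The multipliers can be reproduced the same way. Following the article, the sketch below divides each century-scale estimate by a lifetime car-crash risk: dividing by a rounded 1% gives exactly 19, 25, and 50, while the unrounded 80-year figure of about 0.85% makes the ratios somewhat larger. The dictionary labels are shorthand for the sources quoted above, and treating Bostrom’s “less than 25%” floor as exactly 25% is a simplification.

```python
def cumulative_risk(annual_probability: float, years: int) -> float:
    """Chance that an event happens at least once in `years` independent years."""
    return 1 - (1 - annual_probability) ** years


# Century-scale estimates quoted in the article
estimates = {
    "FHI 2008 survey": 0.19,
    "Bostrom (floor)": 0.25,
    "Rees": 0.50,
}

lifetime_car = cumulative_risk(1 / 9395, 80)  # about 0.85%
rounded_car = 0.01                            # rounding that yields 19x, 25x, 50x

for source, p in estimates.items():
    print(f"{source}: {p / rounded_car:.0f}x (rounded), {p / lifetime_car:.0f}x (unrounded)")
```

With the unrounded car-crash figure the ratios come out at roughly 22, 29, and 59, so the rounded multipliers in the text are, if anything, conservative.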

These figures vary so much in part because estimating the risks associated with advanced technologies requires subjective judgments about how future technologies will develop. But this doesn’t mean that such judgments must be arbitrary or haphazard: they can still be based on technological trends and patterns of human behavior. In addition, other risks like asteroid impacts and supervolcanic eruptions can be estimated by examining the relevant historical data. For example, we know that an impactor capable of killing “more than 1.5 billion people” occurs every 100,000 years or so, and supereruptions happen about once every 50,000 years.
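Historical return periods like these can be turned into probabilities for a given window. This sketch assumes the events arrive as a Poisson process (independently, at a constant average rate), which is a modelling assumption rather than something the cited figures establish; `probability_within` is an illustrative name.

```python
import math


def probability_within(return_period_years: float, window_years: float) -> float:
    """Chance of at least one event in the window, assuming a Poisson process."""
    rate = 1 / return_period_years  # expected events per year
    return 1 - math.exp(-rate * window_years)


# Return periods quoted in the text
impact = probability_within(100_000, 100)        # impactor able to kill >1.5 billion people
supereruption = probability_within(50_000, 100)

print(f"major impact this century:  {impact:.2%}")
print(f"supereruption this century: {supereruption:.2%}")
```

Under this assumption the two natural risks come out at roughly 0.1% and 0.2% per century, far below the century-scale totals quoted earlier.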

Nonetheless, it’s noteworthy that all of the above estimates agree that people should be more worried about existential risks than any other risk mentioned.

Yet how many people are familiar with the concept of an existential risk? How often do politicians discuss large-scale threats to human survival in their speeches? Some political leaders, including one of the candidates currently running for president, don’t even believe that climate change is real. And there are far more scholarly articles published about dung beetles and Star Trek than about existential risks. This is a very worrisome state of affairs. Not only are the consequences of an existential catastrophe irreversible (that is, they would affect everyone living at the time plus all future humans who might otherwise have come into existence), but the probability of one happening is far higher than most people suspect.

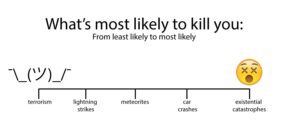

Given the maxim that people should always proportion their fears to the best available evidence, the rational person should worry about the above risks in the following order (from least to most risky): terrorism, lightning strikes, meteorites, car crashes, and existential catastrophes. The psychological fact is that our intuitions often fail to track the dangers around us. So, if we want to ensure a safe passage of humanity through the coming decades, we need to worry less about the Islamic State and al-Qaeda, and focus more on the threat of an existential catastrophe.

*Editor’s note: To clarify, the 0.1% from the Stern Report is used here purely for comparison with the numbers calculated in this article. The number was an assumption made in the Stern Report and has no empirical backing. You can read more about this here.

What about aging? In my opinion, there are two main possible causes of my future death: aging and x-risks. Either may result in my death within 20–40 years. It also seems that the EA movement underestimates aging as the number-two problem.

Alexey: Great point. What comes to mind, in this context, is that aging isn’t an existential risk, and the article specifically concerns existential risks. To be sure, aging is a global and terminal risk, but it lacks the transgenerational component that’s necessary for an event to qualify as existentially risky. (These properties are best seen in my typology of x-risks, which I know you’re familiar with, rather than in Bostrom’s: http://goo.gl/WI6Gcf.)

Thoughts? I’m perpetually open to feedback!

That is true, but if we look closely, the only difference in value would be the value of future generations, which is similar to the value of unborn children. This makes an unexpected bridge with the anti-abortion movement, which seems to put a very high value on unborn children but not much value on existing people.

Anyway, most people give very little value to future generations, especially as a practical value in real actions rather than a claimed value.

Maybe we need to make a stronger value connection with x-risks if we want real action. People were afraid of nuclear catastrophe because it could affect them at the moment, so they thought about it in “near mode” and were ready to protest against it. People also give a lot of value to their children and grandchildren, but almost none to the sixth generation after them.

“Only two things are unavoidable in our life: death and taxes.” – Benjamin Franklin

Life is impossible without death; aging is the gradual entrance to death. There’s no use fearing it :-)))

Olga, I suggest you try reading http://www.nickbostrom.com/fable/dragon.html

Can Americans even be trusted to answer honestly about the existential risk of even partial extinction through climate change? They would rather die than think, to misquote B. Russell, which means extinction is a possibility. Not good. So what is happening? Nothing! Brilliant. Fantastic. Some future. The Punks were prescient when they declaimed that there is ‘no future.’

Lutz: Would you mind elaborating a bit? It’s not clear to me what your point is. Sorry!

Pandemics and epidemics have always been my go-to scenarios for the end coming in my lifetime or my children’s lifetime. Although I do believe that a nuclear war can and will happen someday, I don’t think it will be in my lifetime. I’m 42. Some of the best minds do fear other existential risks; Sam Harris, for example, vehemently believes that AI will ultimately be the death of the human race, and he has gone on record saying so. I know this is purely speculation at this point, and the truth is that our personal fears push us to believe that whatever we fear most will someday catch up with us and kill us. Deep-seated fear and paranoia always seem to win when it comes to speculation about these types of events.